conscious-sapphire•6h ago

Message not being added to LLM prompt

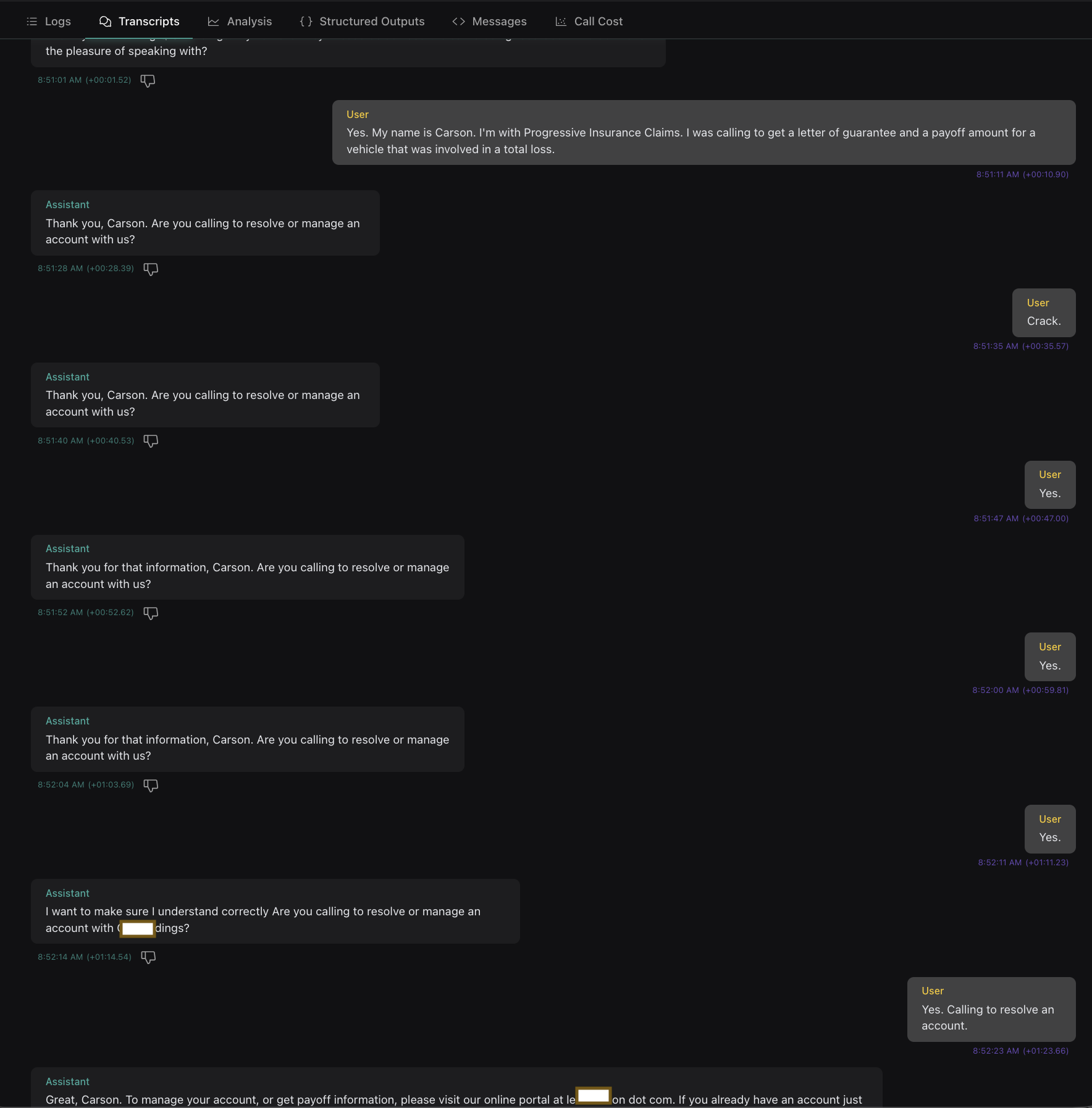

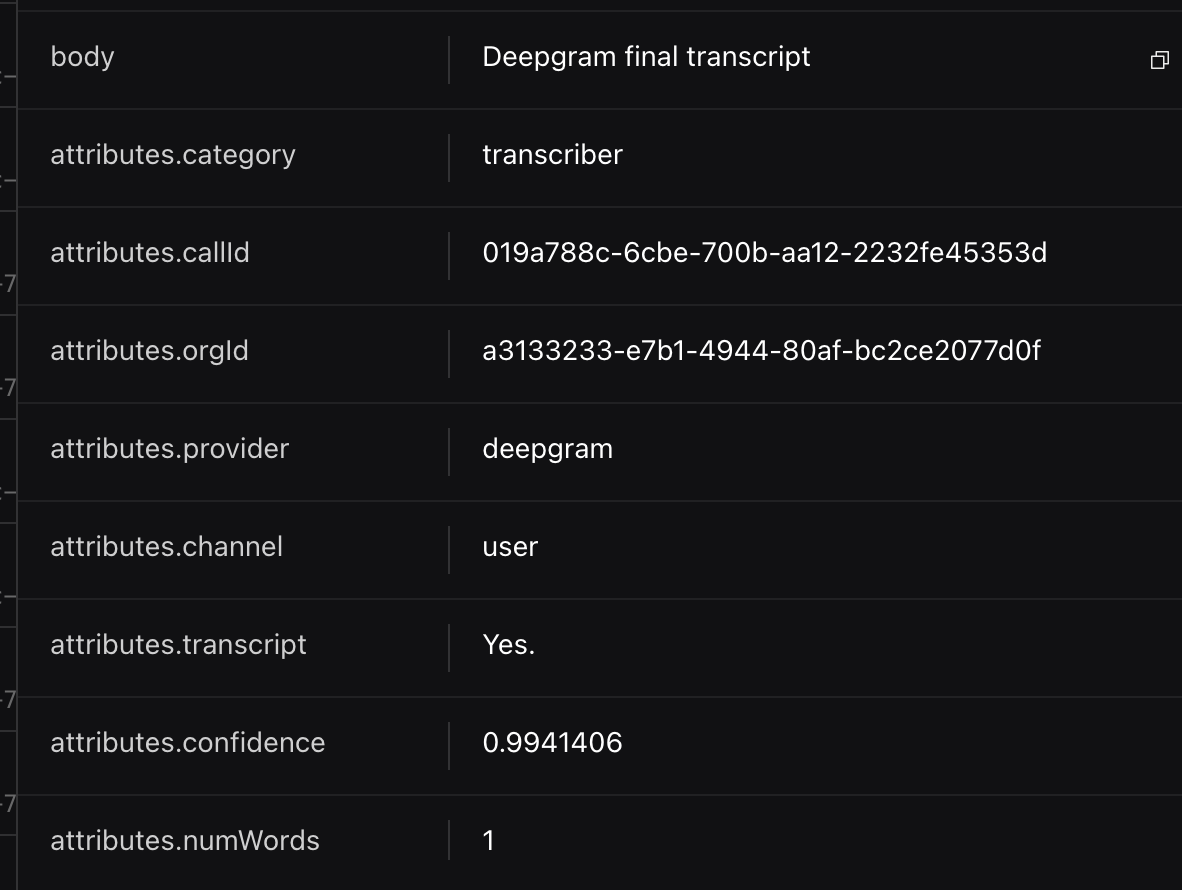

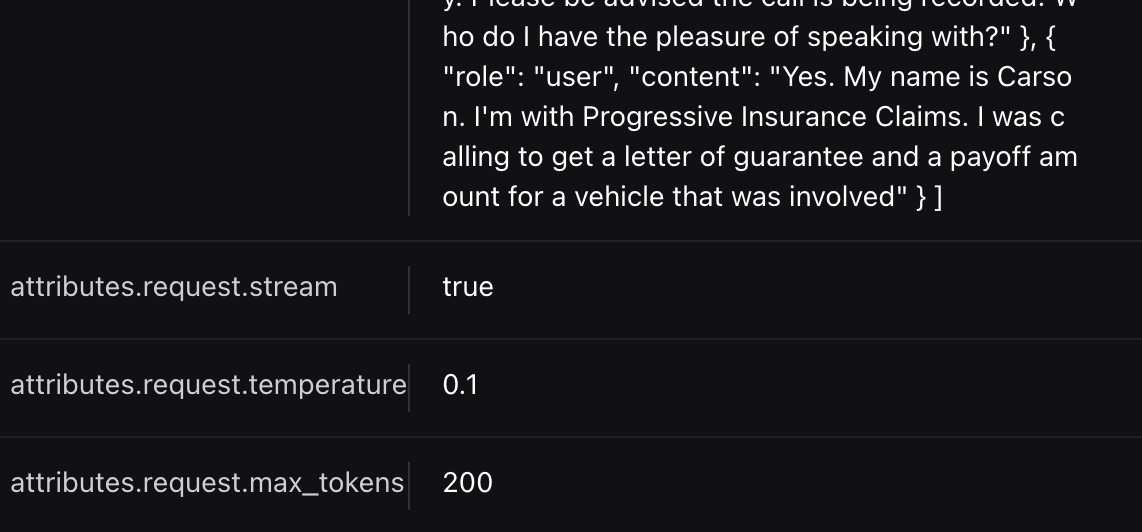

I had a call the other day where the user was asked a question and clearly answered "yes". The transcriber transcribed it as "yes" with a confidence of 0.99..., but when the next LLM call was made, the user message ("yes") was not appended to the prompt, so the LLM asked the same question again. This happened three times in a row on the same call.

A screenshot of the call transcript is attached, along with the detailed log showing the transcription result and its confidence, and the next log entry, where the LLM is called WITHOUT the user message that was accurately transcribed.

This feels like a system issue rather than a configuration issue. Please advise.

Call ID: 019a788c-6cbe-700b-aa12-2232fe45353d
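
When gathering evidence for a report like this, the pattern described above (a final user transcript followed by an LLM request that doesn't contain it) can be checked mechanically. The sketch below is hypothetical: it assumes the call log has been exported as a list of events with made-up fields (`type`, `role`, `final`, `text`, `messages`). Vapi's actual log format isn't shown in this thread, so the field names would need adapting, and `find_dropped_user_turns` is an illustrative helper, not part of any Vapi SDK.

```python
from typing import Any, Optional

# ASSUMPTION: hypothetical event shapes, NOT Vapi's actual log schema.
#   {"type": "transcript", "role": "user", "final": True, "text": "yes"}
#   {"type": "llm_request", "messages": [{"role": "user", "content": "yes"}, ...]}


def _norm(text: Optional[str]) -> Optional[str]:
    # Ignore surrounding whitespace and letter case, so "Yes " matches "yes".
    return text.strip().casefold() if text is not None else None


def find_dropped_user_turns(events: list[dict[str, Any]]) -> list[int]:
    """Return indices of LLM requests whose last user message is not the
    most recent final user transcript that preceded them."""
    pending: Optional[str] = None  # latest final user transcript not yet checked
    flagged: list[int] = []
    for i, event in enumerate(events):
        if event["type"] == "transcript" and event.get("role") == "user" and event.get("final"):
            pending = event["text"]
        elif event["type"] == "llm_request" and pending is not None:
            last_user = next(
                (m["content"] for m in reversed(event["messages"]) if m["role"] == "user"),
                None,
            )
            if _norm(last_user) != _norm(pending):
                flagged.append(i)
            pending = None  # each transcript is checked against the next request only
    return flagged


if __name__ == "__main__":
    events = [
        {"type": "llm_request", "messages": [{"role": "system", "content": "..."}]},
        {"type": "transcript", "role": "user", "final": False, "text": "ye"},
        {"type": "transcript", "role": "user", "final": True, "text": "yes"},
        {"type": "llm_request", "messages": [
            {"role": "system", "content": "..."},
            {"role": "assistant", "content": "Would you like to continue?"},
        ]},
    ]
    print(find_dropped_user_turns(events))  # [3]
```

Interim (non-final) transcripts are ignored, since only the final transcript is expected to reach the prompt. Any flagged index points at an LLM request worth quoting in a support report.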

Suggestion:

Since Vapi is designed to pass transcribed user input into the LLM prompt in real time, a failure at this step (as you describe) points to a possible system-level orchestration issue. The best next step is to report this behavior to Vapi support with your call logs and transcript details so they can investigate; this is not expected behavior and is unlikely to be fixable through configuration.

For more on how the message flow should work, see the Vapi Quickstart Documentation.
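
The flow described above can be pictured with a toy model: each final user transcript is appended to the conversation history before the next LLM request is built. This is a hypothetical illustration, not Vapi's implementation; the `Conversation` class and its method names are invented for the example. It also suggests why the reported symptom follows: if the append step were skipped, the last message the LLM saw would still be the assistant's question, so re-asking it is a plausible response.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Toy turn loop for a voice agent (illustrative only; not Vapi's code)."""

    system_prompt: str
    history: list[dict[str, str]] = field(default_factory=list)

    def on_assistant_spoke(self, text: str) -> None:
        # Record what the assistant said so the next request includes it.
        self.history.append({"role": "assistant", "content": text})

    def on_final_transcript(self, text: str) -> list[dict[str, str]]:
        # Step 1: append the user's final transcript to the history...
        self.history.append({"role": "user", "content": text})
        # Step 2: ...then build the messages for the next LLM call.
        return self.build_request()

    def build_request(self) -> list[dict[str, str]]:
        # The system prompt is prepended on every request; history follows in order.
        return [{"role": "system", "content": self.system_prompt}, *self.history]


if __name__ == "__main__":
    convo = Conversation("You are a booking assistant.")
    convo.on_assistant_spoke("Would you like to confirm the booking?")
    request = convo.on_final_transcript("yes")
    print(request[-1])  # {'role': 'user', 'content': 'yes'}
```

In the reported call, the logged LLM request looked like the output of `build_request()` with step 1 missing.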

⤵️ Mention @Vapi Support Bot for follow-ups 🐥

conscious-sapphire (OP)•6h ago

How do I report this to Vapi support?