Call Latency Question (Average Turn Latency 1292 ms vs. Perceived Latency)

Hello,

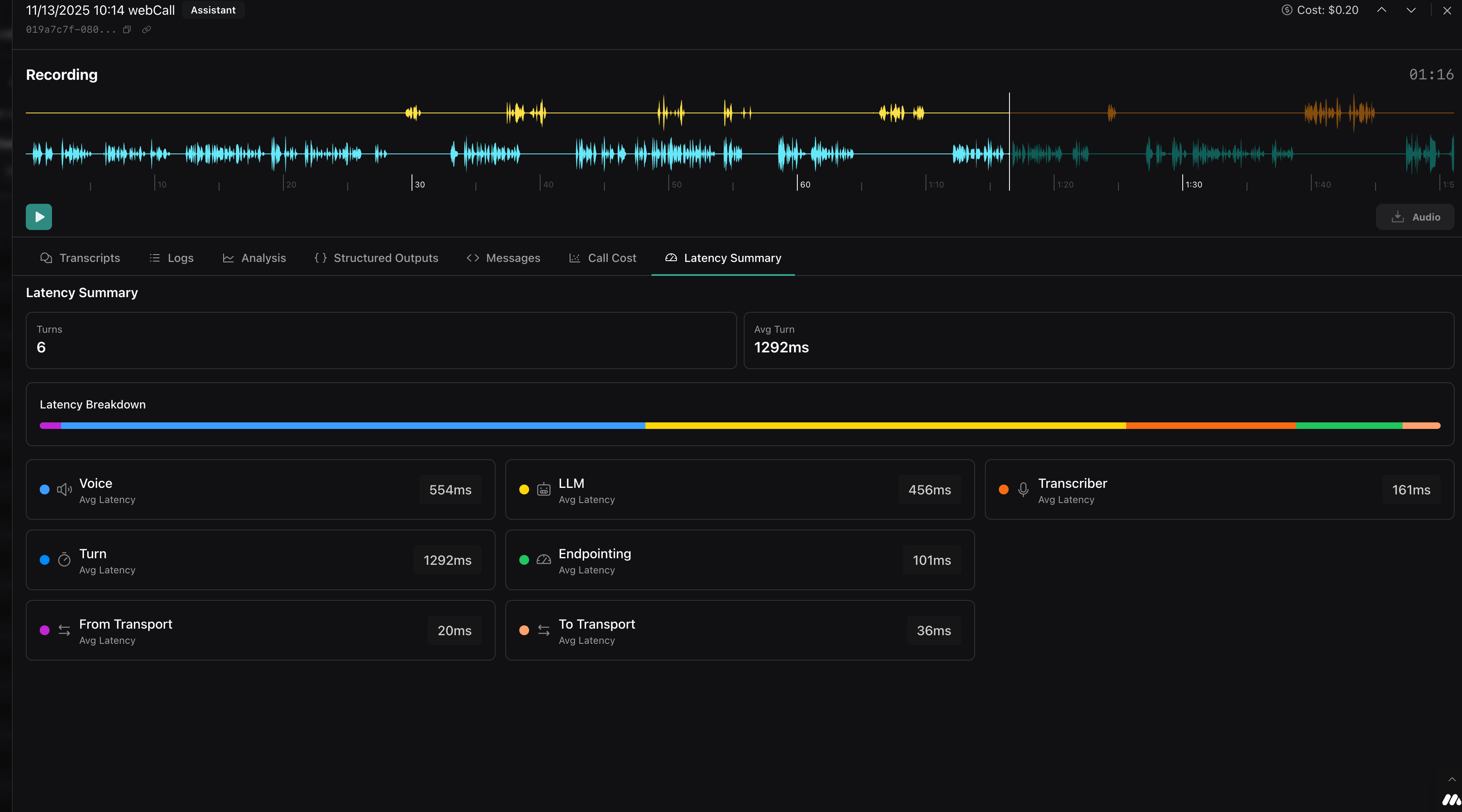

I have a question about the call latency in my call recordings (see the attached screenshot).

In the Latency Summary section, it shows an Average Turn Latency of 1292 ms. However, when I listen to an actual recording, the response latency (from the moment the user stops speaking to the moment the AI starts responding) feels closer to 3 seconds.

My questions are:

Why is there such a large difference between the measured 1292 ms and the latency I actually hear?

How can I effectively reduce the overall response latency? Which component (Voice, LLM, Transcriber, or Endpointing) offers the greatest potential for optimization?

Context and Goal:

For comparison: when I test voice models elsewhere (e.g., in Google AI Studio with Gemini Flash Live), latency is often under one second and transcription is virtually flawless. I would like to achieve similar speed and fluency in VAPI.

Do you have any specific recommendations for setup, model selection, or configuration that would help me minimize this latency and achieve a near-instantaneous, smooth conversation?