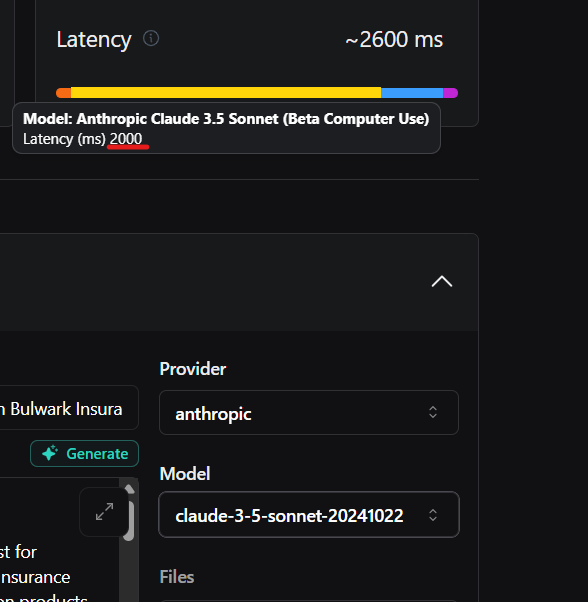

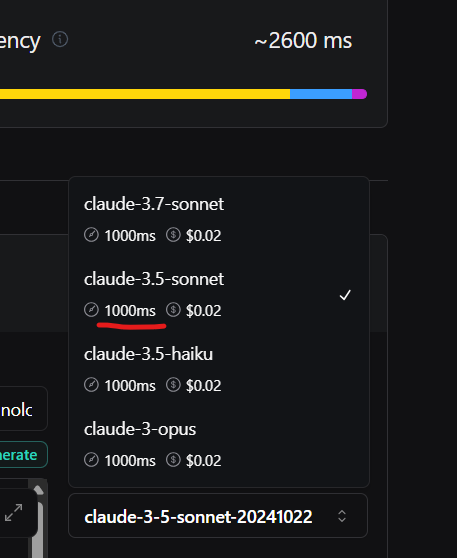

AI Provider Latency Mysteriously Increased

The latency for all AI providers has increased, and it is affecting my agents. Please see the attached images. It appears that the listed latency of 1000ms is not reflected in the breakdown, which shows 2000ms. My agents' latency has gone from 1200ms to 2600ms without my making any changes.