Unexpected fallback to OpenAI model instead of Groq (llama3-70b-8192)

Hello,

We’re running multiple tests with different models, and so far the best results come from the Groq integration using the llama3-70b-8192 model.

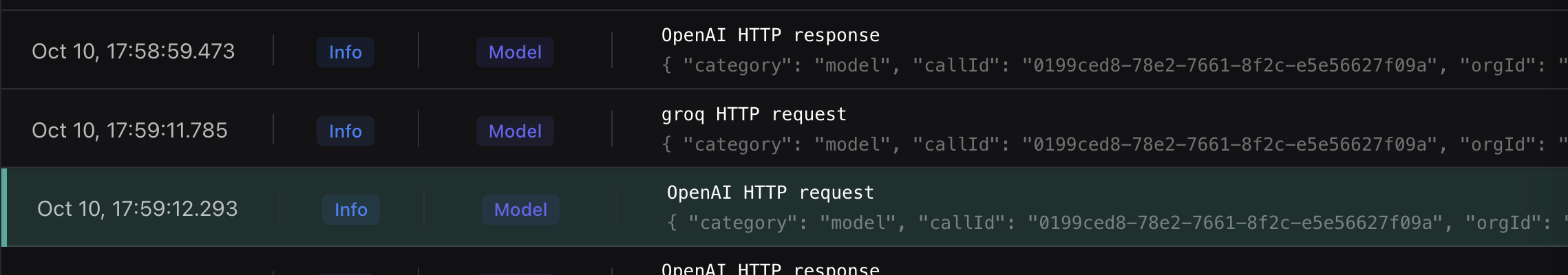

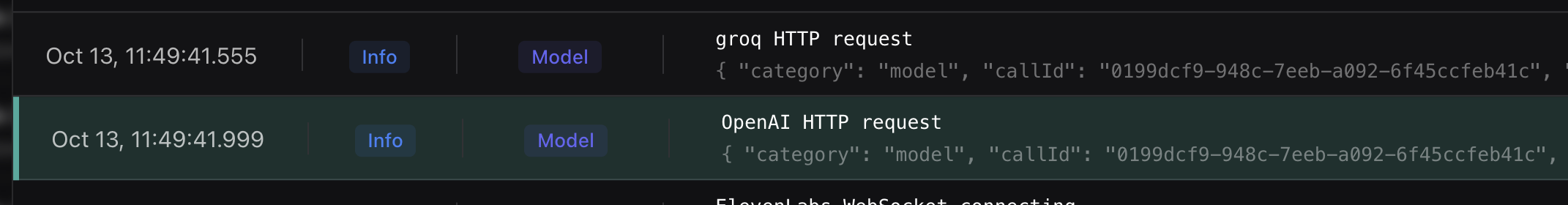

The main issue is that the model usually works very well, but occasionally VAPI falls back to OpenAI’s gpt-4o-2024-08-06, which is significantly slower.

My questions are:

• Why is VAPI calling OpenAI when this assistant is not configured to use it?

• How can we define which model to fall back to?

• How can we set our Groq API key? There’s no option for that in the integration dashboard.

Call ID: 0199ced8-78e2-7661-8f2c-e5e56627f09a

Thanks in advance for your help.
