First Response Latency

I’ve noticed a consistent latency issue on the first user question in all my calls. Specifically, after the user finishes speaking, there is a noticeable delay (around 3 seconds in the example) before the model request becomes active. Subsequent responses are much faster, which suggests the delay is related to initial session setup or model initialization.

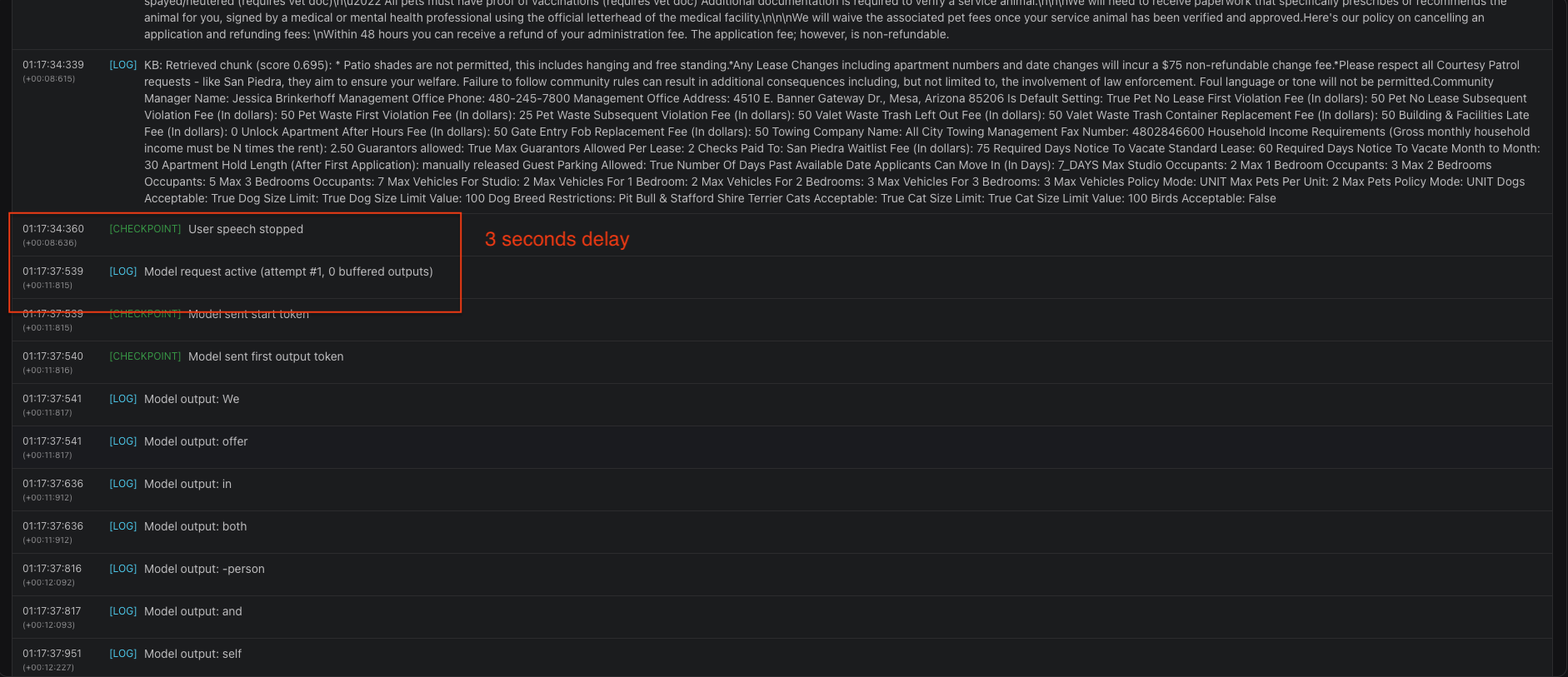

From the logs (see attached image), the delay occurs after endpointing detection and KB retrieval but before the first model request starts. My assumption is that the initial model load could be the cause.
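To put a number on that gap, a minimal sketch like the one below could be used on exported log events. The event names, the timestamp format, and the sample values are all hypothetical placeholders for illustration; they are not the platform's actual log schema or real call data.

```python
from datetime import datetime


def gap_seconds(events, start_name, end_name):
    """Seconds between the first start_name event and the next end_name event.

    events is a list of (event_name, iso_timestamp) tuples in log order.
    Returns None if either event is missing.
    """
    start = None
    for name, ts in events:
        t = datetime.fromisoformat(ts)
        if start is None and name == start_name:
            start = t
        elif start is not None and name == end_name:
            return (t - start).total_seconds()
    return None


# Hypothetical events for the first turn of a call (illustrative values only).
events = [
    ("endpointing_detected", "2024-01-01T10:00:00.000"),
    ("kb_retrieval_done", "2024-01-01T10:00:00.400"),
    ("model_request_started", "2024-01-01T10:00:03.400"),
]

print(gap_seconds(events, "kb_retrieval_done", "model_request_started"))
```

Running the same measurement on the first turn and on a later turn of the same call would show whether the extra delay is specific to the first model request.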

I’d appreciate your guidance on why this happens. It doesn’t seem to be related to KB retrieval. I’d also like to know whether there are best practices to avoid it, perhaps by adjusting settings or using a different model?

Additionally, I was wondering if sending a placeholder tool request that triggers at the beginning of the call could help "make the model ready". Is this a viable approach?

Example call IDs:

- 6e6f1c1f-814b-48a7-8222-97fff942963e

- 89d6d8fd-d989-406b-9944-309c27105eed