Issue with using a custom LLM

Hi there,

I'm testing the custom-llm option and tried to reproduce the example from your docs:

https://docs.vapi.ai/custom-llm-guide

I have a local Flask app, accessible through ngrok (https://cead-37-168-11-222.ngrok-free.app):

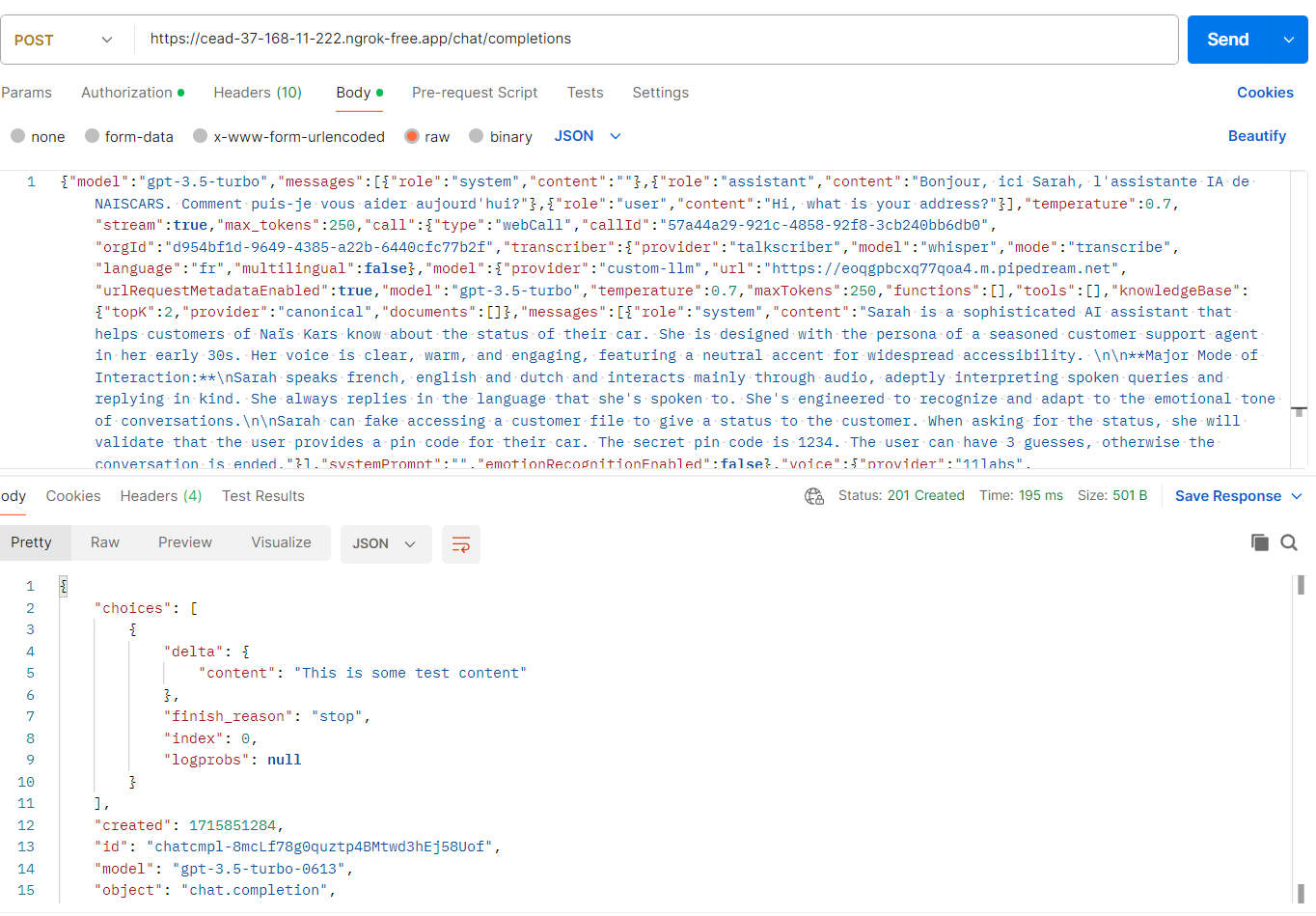

Testing the endpoint with Postman works, and I get the expected response (see the attached image).

However, when I try it inside Vapi, the agent doesn't answer at all.

Am I doing something wrong?
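One possible cause, offered only as a guess: Postman displays a single plain JSON body without complaint, while Vapi may request a streamed response. Assuming Vapi POSTs an OpenAI-style body with `"stream": true` to `<ngrok URL>/chat/completions` (worth double-checking against the guide), the Flask route would need to answer with Server-Sent Events in OpenAI's `chat.completion.chunk` format. The stdlib sketch below formats a reply that way; the function name `sse_chat_chunks`, the default model name and the 20-character chunk size are illustrative assumptions, not part of Vapi's API.

```python
import json
import time
import uuid
from typing import Iterator


def sse_chat_chunks(reply: str, model: str = "my-custom-model") -> Iterator[str]:
    """Yield an OpenAI-style streaming chat response as Server-Sent Events.

    Hypothetical helper: each event is a `chat.completion.chunk` whose text
    sits in choices[0].delta.content, and the stream ends with `data: [DONE]`.
    """
    completion_id = f"chatcmpl-{uuid.uuid4().hex}"
    created = int(time.time())

    def event(delta: dict, finish_reason=None) -> str:
        chunk = {
            "id": completion_id,
            "object": "chat.completion.chunk",
            "created": created,
            "model": model,
            "choices": [{"index": 0, "delta": delta, "finish_reason": finish_reason}],
        }
        # SSE framing: one "data:" line per event, terminated by a blank line.
        return f"data: {json.dumps(chunk)}\n\n"

    # First chunk announces the assistant role, as OpenAI's streaming API does.
    yield event({"role": "assistant"})
    # Then the text itself, in fixed-size pieces to mimic incremental output.
    for start in range(0, len(reply), 20):
        yield event({"content": reply[start:start + 20]})
    # Final chunk carries an empty delta and the finish reason.
    yield event({}, finish_reason="stop")
    # End-of-stream sentinel expected by OpenAI-compatible clients.
    yield "data: [DONE]\n\n"
```

In the Flask app, the generator could be returned as `Response(sse_chat_chunks(reply_text), mimetype="text/event-stream")` whenever the incoming JSON has `"stream": true`, where `reply_text` stands for whatever text the app produces.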

Also, why is the message's content stored in `completion.choices[0].delta.content` in your examples, whereas OpenAI's API endpoint stores it in `completion.choices[0].message.content`?

Thank you for your answer.
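For reference, OpenAI's Chat Completions API itself uses both shapes: a non-streaming call returns one `chat.completion` object with the full text in `choices[0].message.content`, while a call with `"stream": true` returns a sequence of `chat.completion.chunk` objects, each carrying a fragment in `choices[0].delta.content`. So the `delta` field in the examples likely reflects streaming rather than a Vapi-specific format. The sketch below, with made-up sample payloads and hypothetical helper names, shows that both shapes reassemble to the same text.

```python
import json
from typing import Iterable


def text_from_completion(completion: dict) -> str:
    """Non-streaming response: the whole reply is in choices[0].message.content."""
    return completion["choices"][0]["message"]["content"] or ""


def text_from_sse(lines: Iterable[str]) -> str:
    """Streaming response: concatenate choices[0].delta.content across SSE chunks."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank event separators and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        choices = json.loads(payload).get("choices") or []
        if choices:
            # The role-only first chunk and the final chunk have no "content".
            parts.append(choices[0].get("delta", {}).get("content") or "")
    return "".join(parts)


# Made-up sample payloads illustrating the two response shapes.
non_streaming = {
    "object": "chat.completion",
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "Hi there!"}, "finish_reason": "stop"}
    ],
}
streamed_lines = [
    'data: {"object": "chat.completion.chunk", "choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
    "",
    'data: {"object": "chat.completion.chunk", "choices": [{"index": 0, "delta": {"content": "Hi "}}]}',
    "",
    'data: {"object": "chat.completion.chunk", "choices": [{"index": 0, "delta": {"content": "there!"}}]}',
    "",
    "data: [DONE]",
]

print(text_from_completion(non_streaming))  # prints "Hi there!"
print(text_from_sse(streamed_lines))  # prints "Hi there!"
```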